In my previous post I talked about what I believe to be the key to implementing a successful automation strategy: building on good foundations, using a process that encourages communication and collaboration between team members in order to define your requirements in a more automatable way. This process is known as Behaviour Driven Development (BDD). It defines a structure for your stories and, more importantly, your acceptance criteria, which promotes re-use and makes the application of automation a lot easier in the long term.

When teams start to implement automation they often find it difficult to deliver completed automated tests within the same time frame as the developed code, for example a two week iteration. I think this tends to happen when the team is not working closely together to achieve the goal of automation, and you will tend to find that testers are “waiting for developers to complete the code” before they begin writing the tests.

In my mind one of the most important things you are trying to do when defining your scenarios is to think of as many ways as possible in which the feature may be executed. You are trying to brainstorm all of the possible permutations of the feature and capture them in simple one line scenario statements. Another important thing to note is that whatever scenarios you come up with initially do not have to remain fixed for the entire iteration. You should be able to manipulate them, and combine or split them where appropriate, when going through a formalisation phase, provided that you continue to communicate the changes to the rest of the team!

Laying the foundations

During the early stages of implementing automation there will be a fair amount of setup required in order to get just a single test running from end to end. Some of the things you need to consider are:

- Do you have a Continuous Integration (CI) server?

This is an absolute must have. If you are not running your tests on a regular basis and immediately responding to broken builds then you will not be gaining the full benefit of implementing test automation. I would definitely recommend TeamCity by JetBrains for this.

- Is your product deployable automatically?

One of the first questions you need to ask is whether your product can actually be automatically deployed to a test server during the CI build. If not, what are the steps that you will need to take in order to make this happen? Talk with the other developers on your team and plan to get this done as soon as possible.

- Do you have a dedicated build server?

Acceptance tests, especially UI based tests, can be impacted by conditions on the server. Simple things such as how long it takes to open Internet Explorer, Firefox or Chrome can cause tests to break and give you a false negative. By running your acceptance tests on a separate server you are giving yourself the best chance of making your tests stable. If your tests break too often due to environment issues you can quickly lose the confidence of your development team and eventually they will no longer trust the build results.

- Can all members of the team run tests at any time?

Having thousands of tests running overnight on a remote server and reporting failures is good for nothing if it takes a developer hours to recreate the problem. When building your test set consider all of the potential running modes, such as whether the tests are running on the build server or a single developer is trying to isolate a problem. Think about how you can automate the setup of the environment for running the tests in a couple of clicks.

- What is your data set management strategy?

The nature of your problem domain will largely dictate this. You are generally aiming to produce tests which execute as quickly as possible but with as much coverage as possible. Deciding how you set up and tear down your data set will play a significant role in the time it takes to run each test. If all of your tests are date dependent and require modifying the current system date, it might make sense to organise your test steps to execute over a common timeline to get the most test coverage in the shortest test time. Backing up and restoring a database can take a significant amount of time and can quickly become a non-starter. An alternative might be to make each test responsible for creating its own test data and clearing up after itself.

- What is the skill set of the team?

This will have a big impact on the tools that you might choose to implement your automation strategy. I prefer to write tests in the same programming language that is used by the production code, and in my case this is C#, but you could look to Ruby or Python or some other language that your team feels comfortable taking on. My theory behind using the language of your production code is that you should already have “experts” in this language within your team who can provide support when building your automation framework, and you may also gain more traction and “buy in” from them. Using a different language can potentially exacerbate the problem of separate test and development teams, but if your team is up for a bit of polyglot programming then go for it.

- What automation tools do you want/need to use?

This may well be led by the skill set of your team, but there is a vast array of options when it comes to choosing automation tools. In my experience, if you are automating web applications then you cannot go far wrong with Selenium 2 WebDriver, WatiN or WebAii. For Windows or Silverlight you could look at Project White or, if you have a fair bit of cash to throw behind it, at Coded UI and Visual Studio Lab Manager or a multitude of other tooling options. In my next post I’ll talk about the tools I have been using.

These are just some of the things you might need to consider, and you will need to assess your own environment to identify any of the key blockers to making automation happen. Once you start on the road of automation the overwhelming expectation from your bosses will be that “it just works” and provides value for money and a good return on investment, so you need to remove any impediments that might prevent this from happening as soon as you find them.
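To illustrate the data set option mentioned above, where each test is responsible for creating its own data and clearing up after itself, here is a minimal sketch in Python using the standard `unittest` and `sqlite3` modules. The `orders` table, the customer name and the in-memory database are all assumptions made purely for illustration; in practice you would point this at whatever store your product uses.

```python
import sqlite3
import unittest


class CustomerOrderTests(unittest.TestCase):
    """Each test creates the rows it needs and removes them afterwards."""

    def setUp(self):
        # An in-memory SQLite database stands in for the real test database
        # (an assumption for this sketch).
        self.db = sqlite3.connect(":memory:")
        self.db.execute(
            "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)"
        )
        # Create only the data this test class relies on.
        self.db.execute("INSERT INTO orders (customer, total) VALUES ('alice', 20.0)")
        self.db.execute("INSERT INTO orders (customer, total) VALUES ('alice', 5.5)")
        self.db.commit()

    def tearDown(self):
        # Clear up so that the next test starts from a known state.
        self.db.execute("DELETE FROM orders")
        self.db.commit()
        self.db.close()

    def test_order_total_for_customer(self):
        (total,) = self.db.execute(
            "SELECT SUM(total) FROM orders WHERE customer = ?", ("alice",)
        ).fetchone()
        self.assertEqual(total, 25.5)
```

Saved in a test module, this can be run with `python -m unittest`. The trade-off is the one described above: per-test setup keeps tests independent of each other, at the cost of repeating the setup work for every test.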

The importance of Given, When, Then

In my mind using Given, When, Then to describe a ubiquitous language provides the cornerstone for building automated tests, but the challenge tends to be getting everyone to talk in terms of that ubiquitous language, and this can take longer than you might first expect. Even though the format uses simple English words combined with whatever terms are relevant to the business, it can still feel alien because of the structure of the paragraph. There is still a need to “manipulate” the requirement into the “automatable language of the business”. What this means is that it may well take several iterations before everyone just “gets it” and you can become truly productive because there is no more learning or debating about the structure. So do not get disheartened when it feels like you are not gaining traction. There will almost certainly be an uphill struggle to convince everybody that this is the way to go, but once you reach the summit it will be worth the effort.

So don’t expect everybody to just “get it”. As with any new technology, things take time, and different people take different amounts of time to truly grasp the underlying concepts. Begin by drip feeding the concepts of GWT acceptance criteria, then look to automate a single end to end test using it and build from there.

I cannot stress enough the importance of using Given, When, Then when defining automated tests. Each step type plays a key role in enabling the test developer to break down the scenario into manageable, reusable fragments of functionality which can be combined to form numerous other scenarios to ensure greater coverage of the system. I have tried to explain what I think the various step blocks in the GWT structure are for in the following breakdown of Given, When, Then steps.
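To make the idea of reusable step fragments concrete, here is a small sketch of my own in plain Python. It is not the API of any particular BDD tool, and the shopping basket domain and every function name in it are hypothetical. It follows the usual reading of the three step types: Given puts the system into a known state, When performs the action under test, and Then checks the outcome.

```python
class Basket:
    """Hypothetical system under test."""

    def __init__(self):
        self.items = {}

    def add(self, name, price):
        self.items[name] = price

    def remove(self, name):
        self.items.pop(name, None)

    def total(self):
        return sum(self.items.values())


# Given steps: put the system into a known state.
def given_an_empty_basket(context):
    context["basket"] = Basket()


def given_a_basket_containing(context, name, price):
    given_an_empty_basket(context)
    context["basket"].add(name, price)


# When steps: perform the single action under test.
def when_i_add(context, name, price):
    context["basket"].add(name, price)


def when_i_remove(context, name):
    context["basket"].remove(name)


# Then steps: assert on the observable outcome.
def then_the_total_is(context, expected):
    actual = context["basket"].total()
    assert actual == expected, f"expected {expected}, got {actual}"


# Scenarios are just different combinations of the same steps.
def scenario_adding_an_item_to_an_empty_basket():
    context = {}
    given_an_empty_basket(context)
    when_i_add(context, "book", 10)
    then_the_total_is(context, 10)


def scenario_removing_the_only_item():
    context = {}
    given_a_basket_containing(context, "book", 10)
    when_i_remove(context, "book")
    then_the_total_is(context, 0)
```

The two scenarios share every step except the action being tested, which is the kind of re-use that lets a handful of steps cover many more scenarios.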

Phases of the Iteration

In Agile we are looking to get things done and accepted as early as possible in order to gain feedback and continuously deliver working software without risk of destabilising the product. The following is a breakdown of some of the key stages prior to and throughout an iteration that help to make automation happen.

Pre-Iteration Planning

- Story Definition - Customer Team works with Stakeholders to get basic Story definition

This happens prior to the iteration starting and should include sufficient acceptance criteria to estimate. At this point I would look to capture key information points in an informal manner that helps to promote the right discussion of the story when moving into the elaboration phase.

During the Iteration

- Story Elaboration - Product Owner, Testers and Developers work together

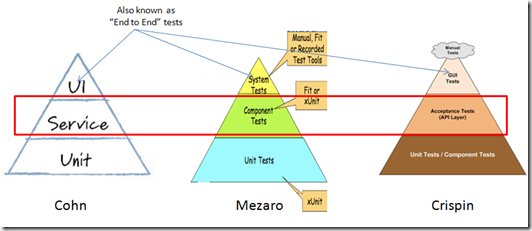

During planning and the early stages of the iteration, scenarios are discussed in detail and evaluated for how best to accept the story. This requires an understanding of what unit, acceptance and end to end tests will be written. I would generally expect at least the high level scenario titles to be defined at this point to give a “feel” for the number of scenarios associated with the feature. We do not necessarily need the full GWT breakdown, and this could be done by smaller teams or pairs.

- Get to work

After working together to elaborate the stories, the Product Owner focuses on getting answers to any initial questions raised. Taking each story, the team should start to whiteboard/blog/wiki designs, discussing implementation strategies to gain agreement on the right approach to deliver the requirements. After this, Testers can set to work on creating “failing” acceptance tests, including developing the underlying supporting framework, and Developers start developing “failing” unit tests and implementing the required business logic. You should be able to identify key interfaces that allow the Developers and Testers to work on separate parts of the same feature at the same time and then tie it all together as and when it makes sense to.

- Review - Daily Stand Up

As the understanding of requirements increases and code begins to be implemented, the failing tests should start to go green. Developers continue to implement scenarios to make more tests pass. Testers begin to do more exploratory testing and communicate any undefined scenarios they find to the Product Owner to determine whether or not the scenarios should be supported in the current version.

- Revise

As more tests go green the code should be modified and refactored where appropriate to ensure code quality is high and maintainable whilst keeping tests green. The Developers should be putting more effort into testing towards the end of the iteration; the code to implement a feature should be done as early as possible. If you are checking in code to start a new feature in the last few days of the iteration then you should seriously consider whether it will be feasible to complete that story end to end. Would your time be better spent making sure the current product you have is truly “ready to ship”?

- Done

All UI, acceptance and unit tests go green, manual exploratory tests have been done and application is considered “ready to ship”
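As a rough sketch of the “key interfaces” idea from the Get to work stage, the example below shows how an agreed interface lets a tester write an acceptance check that starts out red and goes green once the developer's implementation arrives. It is written in Python for brevity, and the discount rule and every name in it are hypothetical rather than taken from any real project.

```python
from typing import Protocol


class DiscountService(Protocol):
    """The interface the team agrees on up front (hypothetical)."""

    def discount_for(self, order_total: int) -> int: ...


class NotYetImplementedDiscountService:
    """Placeholder the tester can code against on day one."""

    def discount_for(self, order_total: int) -> int:
        raise NotImplementedError("business logic not written yet")


class TenPercentOverHundredDiscountService:
    """The developer's implementation, delivered later in the iteration."""

    def discount_for(self, order_total: int) -> int:
        return order_total // 10 if order_total >= 100 else 0


def large_orders_get_ten_percent_off(service: DiscountService) -> bool:
    """Acceptance check: True means green, False means red."""
    try:
        return service.discount_for(200) == 20
    except NotImplementedError:
        return False


# Red while the feature is still being built, green once it is in place.
print(large_orders_get_ten_percent_off(NotYetImplementedDiscountService()))
print(large_orders_get_ten_percent_off(TenPercentOverHundredDiscountService()))
```

Because both sides depend only on the interface, the acceptance test and the business logic can be written in parallel and tied together when it makes sense to.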

Summary

When you first start to implement an automation strategy (especially on a brownfield project) try not to set yourself up for a fall by committing to too much. The introduction of automation will be an alien concept and a complete paradigm shift to most people, and it will take time for them to “get their heads around” it. Try to start by introducing the concept of automatable requirements, get the team talking in those business terms, and look to configure your environment to support automation. Plan in the setup of your continuous integration and build server configurations and start to get your team up to speed on your chosen technologies.

Next Steps

Take a look at your current development environment. Is it geared up to start automating immediately? If not, what is missing and what are you going to do about getting it sorted? In my next post I’ll take a deeper dive into my tool sets of choice and how to apply them to create a maintainable test set.